I was looking into setting up an instance of RDPGW for a client.

The client currently has a number of Windows Server VMs in a workgroup, which they connect to directly on port 3389, exposed to the internet. This is obviously a massive security issue.

Additionally, the RDP port is often blocked on corporate networks.

The better option would be to set up an Active Directory domain and an RDP Gateway server, but as a quick alternative while the client decides whether the cost is worth it, I set up an RDP gateway using the open source project RDPGW.

The project itself is very interesting but the documentation is unfortunately seriously lacking and it took me quite a bit of effort to get everything set up, so I wanted to share the results in case it helps someone.

I didn’t find a way to get RDPGW to work behind a reverse proxy, so RDPGW has to bind port 443 itself. Unfortunately, this means you need two public IP addresses if you also intend to host Keycloak on port 443.

Setup

This is the docker-compose.yml file:

version: "3.9"

services:
  postgres:
    container_name: db
    image: "postgres:14.4"
    restart: always
    healthcheck:
      test: [ "CMD", "pg_isready", "-q", "-d", "postgres", "-U", "postgres" ]
      timeout: 45s
      interval: 10s
      retries: 10
    volumes:
      - ./postgres_data:/var/lib/postgresql/data
      #- ./sql:/docker-entrypoint-initdb.d/:ro # turn it on, if you need run init DB
    environment:
      # default credentials, change them outside of a test setup
      POSTGRES_USER: postgres
      POSTGRES_PASSWORD: postgres
      POSTGRES_DB: keycloak
      POSTGRES_HOST: postgres
    networks:
      - pgsql

  keycloak:
    container_name: keycloak
    image: quay.io/keycloak/keycloak
    command: ['start', '--proxy', "edge"]
    restart: always
    depends_on:
      postgres:
        condition: service_healthy
    environment:
      JAVA_OPTS_APPEND: -Dkeycloak.profile.feature.upload_scripts=enabled
      KC_DB_PASSWORD: postgres
      KC_DB_URL: "jdbc:postgresql://postgres/keycloak"
      KC_DB_USERNAME: postgres
      KC_DB: postgres
      KC_HEALTH_ENABLED: 'true'
      KC_HTTP_ENABLED: 'true'
      KC_METRICS_ENABLED: 'true'
      # quoted: Compose expects strings, not YAML booleans, in environment maps
      KC_HOSTNAME_STRICT_HTTPS: 'true'
      KC_HOSTNAME: rdgateway-keycloak.example.com
      PROXY_ADDRESS_FORWARDING: 'true'
      # initial admin account, change the password
      KEYCLOAK_ADMIN: admin
      KEYCLOAK_ADMIN_PASSWORD: password
    healthcheck:
      test: ["CMD-SHELL", "exec 3<>/dev/tcp/127.0.0.1/8080;echo -e \"GET /health/ready HTTP/1.1\r\nhost: http://localhost\r\nConnection: close\r\n\r\n\" >&3;grep \"HTTP/1.1 200 OK\" <&3"]
      interval: 10s
      retries: 10
      start_period: 20s
      timeout: 10s
    ports:
      - "8080:8080"
      - "8787:8787" # debug port
    networks:
      - pgsql
      - keycloak

  rdpgw:
    image: bolkedebruin/rdpgw:latest
    restart: always
    container_name: rdpgw
    ports:
      - "80:80"
      - "443:443"
    volumes:
      - ./rdpgw.yaml:/opt/rdpgw/rdpgw.yaml
    depends_on:
      keycloak:
        condition: service_healthy
    networks:
      - keycloak

networks:
  pgsql:
    driver: bridge
  keycloak:
    driver: bridge

You need to create a postgres_data directory next to the compose file (or use a named volume instead).
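As a small convenience, the directory preparation can be scripted. This is only a sketch: the function name `prepare_project_dir` is my own, and it assumes the compose file, `postgres_data` and `rdpgw.yaml` all live in the same directory. It also checks that `rdpgw.yaml` exists as a regular file, because with a bind mount like the one in the compose file Docker typically creates a missing source path as a directory, which then breaks the RDPGW container.

```shell
# Hypothetical helper: prepare the project directory before the first start.
# Usage: prepare_project_dir /path/to/project   (defaults to the current directory)
prepare_project_dir() {
  dir="${1:-.}"

  # Postgres stores its data here (bind-mounted by the compose file).
  mkdir -p "$dir/postgres_data" || return 1

  # rdpgw.yaml must already exist as a regular file before the stack starts.
  if [ ! -f "$dir/rdpgw.yaml" ]; then
    echo "warning: $dir/rdpgw.yaml is missing or not a regular file" >&2
    return 1
  fi
  return 0
}

# Example (commented out so the snippet has no side effects when sourced):
# prepare_project_dir . && echo "project directory looks ready"
```

If the function returns non-zero, create `rdpgw.yaml` first and run it again.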

This is the rdpgw.yaml file (look at the documentation for information on secrets):

Server:
  Tls: auto
  GatewayAddress: rdgateway.example.com
  Port: 443
  Hosts:
    - rdshost-1.com:3389
    - rdshost-2.com:3389
  RoundRobin: any
  SessionKey: changeme
  SessionEncryptionKey: changeme
  Authentication:
    - openid
OpenId:
  ProviderUrl: https://rdgateway-keycloak.example.com/realms/rdpgw
  ClientId: rdpgw
  ClientSecret: 01cd304c-6f43-4480-9479-618eb6fd578f
Client:
  UsernameTemplate: "{{ username }}"
  NetworkAutoDetect: 1
  BandwidthAutoDetect: 1
  ConnectionType: 6
  SplitUserDomain: true
Security:
  PAATokenSigningKey: changeme
  UserTokenEncryptionKey: changeme
  VerifyClientIp: false
Caps:
  TokenAuth: true
  IdleTimeout: 10
  EnablePrinter: true
  EnablePort: true
  EnablePnp: true
  EnableDrive: true
  EnableClipboard: true

The “hosts” section describes all the hosts the server allows access to.
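The four `changeme` values need to be replaced with real secrets. As far as I understand the RDPGW README, these are expected to be random strings of 32 characters, but treat that length as an assumption and check the README for your version. A minimal sketch that prints one fresh 32-character hex string per key (the helper name `gen_key` is my own):

```shell
# Print a random 32-character hex string (16 random bytes from /dev/urandom).
gen_key() {
  od -An -tx1 -N16 /dev/urandom | tr -d ' \n'
}

# One independent key per setting; paste the output into rdpgw.yaml.
for name in SessionKey SessionEncryptionKey PAATokenSigningKey UserTokenEncryptionKey; do
  printf '%s: %s\n' "$name" "$(gen_key)"
done
```

Each run produces different values, so generate them once and keep the resulting rdpgw.yaml out of version control.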

Configuration

After all the files have been created in your chosen directory, you can run

docker compose up -d

After some time it will bring up Postgres and then Keycloak. RDPGW will not come up until we’ve created the new realm in Keycloak.
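Rather than guessing when Keycloak is ready, you can poll it. The sketch below is a generic retry helper (the name `wait_until` is my own); it re-runs any command until it succeeds or the attempts run out. The commented usage assumes Keycloak's readiness endpoint is reachable at http://localhost:8080/health/ready, as in the compose file's health check. Some newer Keycloak releases serve health endpoints on a separate management port, so adjust the URL if needed.

```shell
# wait_until ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Re-runs COMMAND until it exits 0, or gives up after ATTEMPTS tries.
wait_until() {
  attempts="$1"
  delay="$2"
  shift 2
  i=1
  while [ "$i" -le "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    # Only sleep if another attempt follows.
    if [ "$i" -lt "$attempts" ]; then
      sleep "$delay"
    fi
    i=$((i + 1))
  done
  return 1
}

# Example (commented out; needs curl and a running stack):
# wait_until 30 5 curl -fsS http://localhost:8080/health/ready \
#   && echo "Keycloak is ready" \
#   || echo "Keycloak did not become ready in time"
```

With 30 attempts and a 5 second delay this waits roughly two and a half minutes before giving up.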

You will need to handle the reverse proxying of Keycloak on your own; it is outside the scope of this article.

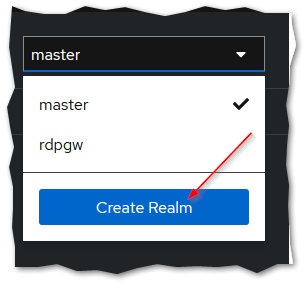

Log in to Keycloak with the admin user you’ve defined in the compose file (KEYCLOAK_ADMIN) and, at the top left, create a new realm:

There, you can import the Realm configuration from here.

It will create a realm named “rdpgw” with a single user named “admin@rdpgw” with password “admin” (which you should change promptly).

Once this is done, the RDPGW container should come up.

You should be able to open https://rdgateway.example.com/connect and log in with the user “admin@rdpgw”.

It will download a .rdp file, which you should be able to open to connect to the first host in the list.

If you want to connect to a specific host, you should use https://rdgateway.example.com/connect?host=destination-host-url1.com
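If you hand these links out to users, a tiny helper keeps them consistent. This is only a sketch: the function name `connect_url` is my own, and it performs no URL encoding, so it assumes plain host names like the ones above.

```shell
# connect_url GATEWAY [HOST]
# Prints the /connect URL, optionally targeting a specific host.
connect_url() {
  gateway="$1"
  host="${2:-}"
  if [ -n "$host" ]; then
    printf 'https://%s/connect?host=%s\n' "$gateway" "$host"
  else
    printf 'https://%s/connect\n' "$gateway"
  fi
}

connect_url rdgateway.example.com
# prints https://rdgateway.example.com/connect
connect_url rdgateway.example.com destination-host-url1.com
# prints https://rdgateway.example.com/connect?host=destination-host-url1.com
```

The link still has to be opened in a browser, since the login goes through Keycloak before the .rdp file is downloaded.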